Generative AI security is rapidly becoming a critical part of organizational risk management as businesses adopt advanced AI technologies. Generative AI introduces distinct challenges that call for comprehensive AI risk assessment to protect data integrity and maintain compliance. Companies using AI across the enterprise must understand the machine learning security threats involved. These include vulnerabilities in large language models (LLMs) that can stem from biased training data or inadequate data handling. Effective AI policies are essential for mitigating these risks while leaving room for innovation. Without a structured approach, organizations may inadvertently expose themselves to data breaches or the misuse of sensitive information.

The security landscape around generative AI grows more complex as organizations build AI services into their workflows. With threat assessment and responsible AI deployment now at the center of the discussion, leaders must understand what adopting these technologies implies. As enterprises explore what AI tools can do, the need for effective oversight becomes clear. That oversight includes evaluating the integrity of the AI models behind these applications. By proactively addressing vulnerabilities in generative platforms, businesses can create a safer work environment and make better-informed decisions. Managing AI usage carefully is therefore a crucial step toward safeguarding organizational assets and supporting sustainable growth in a digitally driven world.

Understanding Generative AI Security Challenges

The surge in generative AI applications, particularly large language models (LLMs), has introduced significant security challenges for organizations. As employees rely more on these tools, data integrity and privacy become central concerns. For instance, an employee who uploads proprietary data to an unverified generative AI service may unwittingly expose sensitive information to a third party. This risk underlines the need for a structured approach to generative AI security, one that assesses how AI risk factors affect overall business operations.
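
As a minimal sketch of how such a control might look, the Python below screens text before it is sent to a generative AI service. It checks the destination against an allowlist of approved services and scans for a few sensitive-data patterns. The domain names, the pattern list, and the `check_upload` function are hypothetical illustrations, not any product's API. A production data loss prevention policy would be far more thorough.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of generative AI services the organization has vetted.
APPROVED_AI_DOMAINS = {"ai.internal.example.com", "approved-llm.example.net"}

# Illustrative sensitive-data patterns; real DLP rules would be far broader.
SENSITIVE_PATTERNS = {
    "confidential_marker": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
    "ssn_like_number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def check_upload(destination_url: str, text: str) -> list[str]:
    """Return policy violations for an upload; an empty list means it may proceed."""
    violations = []
    host = urlparse(destination_url).hostname or ""
    # Service check: is the destination an approved generative AI service?
    if host not in APPROVED_AI_DOMAINS:
        violations.append(f"unapproved service: {host or destination_url}")
    # Content check: flag sensitive data even when the service is approved.
    for name, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            violations.append(f"sensitive content: {name}")
    return violations


print(check_upload("https://chat.unknown-ai.example/api", "Q3 plan - CONFIDENTIAL"))
# ['unapproved service: chat.unknown-ai.example', 'sensitive content: confidential_marker']
```

Flagging sensitive content even for approved services reflects the point above: approval of a service does not by itself make every upload appropriate.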

Generative AI security also extends beyond user practices to the underlying models. Security leaders must ensure that these models are both compliant and resilient against threats such as unauthorized access or exploitation. As enterprises adopt AI technologies to drive innovation, they need robust security policies that address both service risks and model risks, protecting the organization from breaches and compliance violations.
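
To illustrate keeping service risk and model risk separate, here is a hypothetical Python sketch that records findings for each and reports them independently. The `ServiceProfile` and `ModelProfile` fields, the issue labels, and the rule that only certain model issues block use are all assumptions made for illustration, not a prescribed assessment method.

```python
from dataclasses import dataclass, field

# Hypothetical model issues serious enough to block use outright.
BLOCKING_MODEL_ISSUES = {"malware_generation", "phishing_generation"}


@dataclass
class ServiceProfile:
    """Risk-relevant facts about the user-facing service (hypothetical fields)."""
    name: str
    approved: bool
    retains_user_data: bool  # does the provider keep prompts and uploads?
    sso_enforced: bool       # is access tied to corporate identity?


@dataclass
class ModelProfile:
    """Issues found when testing the underlying model (hypothetical labels)."""
    name: str
    known_issues: set[str] = field(default_factory=set)


def assess(service: ServiceProfile, model: ModelProfile) -> dict:
    """Report service and model findings separately, plus an overall decision."""
    service_findings = []
    if not service.approved:
        service_findings.append("service not approved")
    if service.retains_user_data:
        service_findings.append("provider retains user data")
    if not service.sso_enforced:
        service_findings.append("no SSO enforcement")
    model_findings = sorted(model.known_issues)
    # Any service finding blocks use; only designated model issues block use.
    blocked = bool(service_findings) or bool(model.known_issues & BLOCKING_MODEL_ISSUES)
    return {"service": service_findings, "model": model_findings, "allowed": not blocked}


svc = ServiceProfile("ExampleChat", approved=True, retains_user_data=False, sso_enforced=True)
print(assess(svc, ModelProfile("example-llm", {"bias"})))
# {'service': [], 'model': ['bias'], 'allowed': True}
```

Reporting the two lists separately lets service issues and model issues be routed to whoever owns each. A non-blocking model finding such as bias is still surfaced for mitigation rather than silently ignored.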

Frequently Asked Questions

What are the main security concerns associated with generative AI in enterprise AI usage?

Generative AI, while enhancing creativity and efficiency, introduces security concerns like unauthorized data exposure, LLM vulnerabilities, and misuse of AI capabilities. Organizations must assess these risks to safeguard sensitive information during enterprise AI usage.

How does AI risk assessment help mitigate risks linked to generative AI technologies?

AI risk assessment identifies potential threats and weaknesses in generative AI implementations, such as biases in training data or vulnerabilities in LLMs. By conducting thorough assessments, organizations can formulate effective AI policies that protect against these risks.

What are LLM vulnerabilities and why are they important in generative AI security?

LLM vulnerabilities are weaknesses in large language models that attackers can exploit or that lead to harmful behavior, such as generating toxic content or producing biased outputs. Understanding these vulnerabilities is crucial for ensuring secure and responsible use of generative AI in organizations.

How can enterprises formulate effective AI policies for safe generative AI usage?

Enterprises should establish AI policies by assessing both service and model risks associated with generative AI. This includes evaluating data privacy, compliance requirements, and implementing strategies for continuous monitoring and improvement to promote secure AI use.

What technologies can assist organizations in addressing generative AI security challenges?

AI risk assessment tools can streamline the evaluation of generative AI services and their underlying models, for example by providing visibility into the risks each service and model carries. These insights help organizations manage potential threats and strengthen security across enterprise AI usage.

Why is it essential to differentiate between the service and the underlying model in generative AI applications?

Differentiating between the generative AI service and its underlying model is vital because each has distinct security implications. The service raises risks around how users interact with it and what data they submit, while vulnerabilities in the model can undermine the reliability and safety of every output it produces.

What steps can organizations take to promote responsible use of generative AI while managing risks?

Organizations can promote responsible generative AI usage by conducting comprehensive AI risk assessments, implementing robust security policies, and selecting technologies that offer visibility into AI service risks, thus balancing innovation and security.

How can AI risk assessments reveal biases within LLM training data?

AI risk assessments can analyze the training data used in LLMs to identify inherent biases that may influence output quality. By scrutinizing this data, organizations can mitigate the risks associated with biased content in generative AI applications.

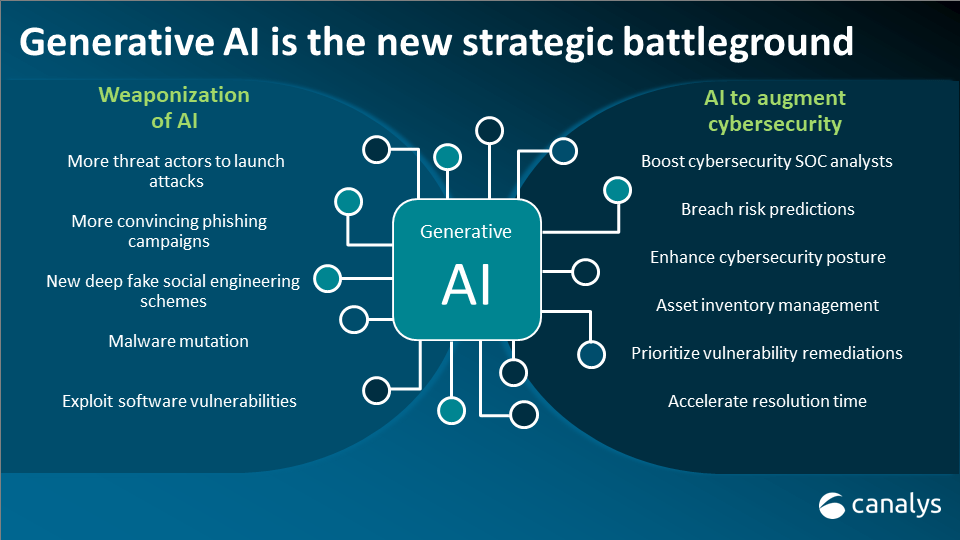

What are the implications of generative AI being exploited to create malware or phishing attacks?

Generative AI’s capability to produce realistic content can be weaponized for malware creation or phishing attacks, posing significant security risks. Organizations must employ vigilant monitoring and risk management strategies to detect and prevent these malicious uses.

How does increasing compliance with AI policies impact generative AI security?

Greater compliance with AI policies strengthens generative AI security. The policies establish clear usage guidelines and ethical standards, and adherence to them reduces the risk of unauthorized data access and information leaks.

| Key Points | Details |
|---|---|
| Generative AI Adoption | Generative AI is increasingly used in workplaces to foster creativity, research, and idea generation. |
| Security Challenges | Increased use of generative AI raises risks, such as sensitive data being uploaded to unapproved services. |
| Importance of Risk Assessment | Organizations must assess risks associated with both AI services and their underlying models to improve security. |
| Service vs. Model | The service is the user-facing interface, while the model is the AI algorithm operating behind it. |
| Common Model Risks | Models may produce biased, toxic, or harmful content, or even be exploited for cyberattacks. |
| Promoting Responsible AI Usage | Leaders can strike a balance by using technology to assess AI risks and enable safe usage. |

Summary

Generative AI security is an essential topic that leaders must prioritize to safeguard their organizations. With the rise of generative AI technologies, the potential for misuse and unforeseen security risks necessitates a proactive approach. By understanding the nuances between AI services and their underlying models, and effectively assessing associated risks, businesses can leverage the benefits of generative AI while protecting sensitive information and maintaining compliance. This balanced perspective will enable innovation without sacrificing safety.